Gregory Cooke

I am currently a software engineer at Microsoft where I focus on performance and reliability for our core compute stack. Previously I was a research scientist and engineer at Pindrop where I focused on developing our machine learning system for fraud detection in the call center, as well as researching new opportunities and technologies to improve our products.

I graduated from the Georgia Institute of Technology with my Master's and Bachelor's in Electrical and Computer Engineering. I performed research in the field of multi-agent autonomous robotic systems, and my coursework focused on machine learning, analytics, computing, robotics, and advanced mathematics.

Experience

Software Engineer II

At Microsoft, I am a software engineer for Azure Core Compute on the Performance and Reliability Team. My work focuses on building high-scale, low-latency distributed systems to improve the performance, reliability, and user experience of VM operations on Azure.

Research Scientist

At Pindrop, my work largely focused on our machine learning platform. This platform is an automated system for model training, optimization, and deployment that must be reliable and rigorously tested. Over time, I advanced from contributor to lead developer for this platform and was responsible for compatibility, testing, and release planning with other component leads, as well as a gradual migration of the codebase from the Research department to the Engineering department. My most significant contribution to the platform was a large-scale refactor of a legacy module, which resulted in significantly cleaner, more maintainable, tested code as well as a 50% reduction in total run-time for end-to-end model training.

I also designed and implemented a solution for automated infrastructure deployment and for running model training algorithms at scale for the Cloud product, using a combination of Terraform, Docker, and AWS services. This saved substantial employee time, as it was previously a manual process, and standardized the machine learning platform’s dataflow for all of our cloud customers. This standardization also allowed me to create a monitoring system for the ML platform’s performance.

As the lead researcher for Automatic Speech Recognition (ASR) and Natural Language Processing (NLP), I focused on longer-horizon work: understanding the various approaches to these problems and experimenting with different ways they might be used in our products.

Graduate Research Assistant

At GTRI, I worked in the Collaborative Autonomy group, a part of the Robotics and Autonomous Systems Division. I worked there full-time in Summer 2017 and 2018 and part-time while doing my Master’s coursework in Fall 2017, Spring 2018, and Fall 2018. While there, I worked on several projects that centered around distributed autonomous robotic systems.

In one project, I researched and developed biologically inspired, low-communication, decentralized algorithms for controlling autonomous swarms of unmanned aerial vehicles. I tested these algorithms in SCRIMMAGE (Simulating Collaborative Robots in Massive Multi-Agent Game Execution), an open-source simulator that this group at GTRI actively develops, and generated tens of gigabytes of data on which I performed a variety of analyses. This work resulted in two peer-reviewed papers, one as first author. I presented the paper for which I was first author at the Practical Applications of Agents and Multi-Agent Systems (PAAMS) Conference in Toledo, Spain. I also made several contributions to the simulator itself, including developing a pipeline for storing simulation data in a PostgreSQL database.

Publications

- Cooke, Gregory, et al. “Bio-inspired Nest-Site Selection for Distributing Robots in Low-Communication Environments.” International Conference on Practical Applications of Agents and Multi-Agent Systems. Springer, Cham, 2018.

- Bowers, Kenneth, et al. “Trust-based Information Propagation on Multi-robot Teams in Noisy Low-communication Environments.” International Symposium on Distributed Autonomous Robotic Systems. 2018.

Intern

At Southern Company, I worked in the Transmission and Distribution R&D group. Most of my work focused on a smart grid project called the Transmission Monitoring, Diagnostic, and Visualization Tool, a partnership with the Electric Power Research Institute. One of the big challenges was the enormous amount of manual data entry and configuration needed to add meaningful analytics to the existing system. One desired calculation was the variance among the current measurements on the three lines for every sensor in the system, but setting this up was estimated to need about a year of manual configuration work. I developed a Python tool to automate this process that instead took only minutes to run.
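The calculation itself is simple once the per-sensor data is available. The following is a minimal sketch of that idea, not the actual tool: the function name, sensor names, and readings are hypothetical, and it assumes each sensor reports exactly three line currents in amperes.

```python
from statistics import pvariance


def phase_current_variance(readings):
    """Return the population variance of the three line currents per sensor.

    `readings` maps a (hypothetical) sensor name to its three line
    currents in amperes.
    """
    result = {}
    for sensor, currents in readings.items():
        if len(currents) != 3:
            raise ValueError(
                f"{sensor}: expected 3 line currents, got {len(currents)}"
            )
        result[sensor] = pvariance(currents)
    return result


# Hypothetical readings, for illustration only.
example = {
    "sensor_a": (100.0, 100.0, 100.0),  # perfectly balanced lines
    "sensor_b": (98.0, 100.0, 102.0),   # slightly unbalanced lines
}
variances = phase_current_variance(example)
```

A balanced sensor yields a variance of zero, so larger values flag sensors whose three lines carry noticeably different currents.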

I also worked on a distribution IoT project in which I configured and field tested a group of devices to create a radio frequency mesh network to support further developments in smart metering.

Undergraduate Researcher

I worked in the Electronic Materials Characterization Lab in the School of Materials Science and Engineering. I focused on how conductive nanoparticles could be used to coat polymers to make them conductive. My work consisted of creating dispersions with different concentrations of nanoparticles, coating polymers with them, and then testing the resulting electrical properties of the polymers.

Education

Online Courses/Certifications

Udacity’s Deep Learning Nanodegree

Udacity’s Natural Language Processing Nanodegree

Biking vs. Driving Commute Analysis

Sometimes I bike to work, sometimes I drive to work. I was curious about the differences between the two, so I collected data for a year and analyzed it!

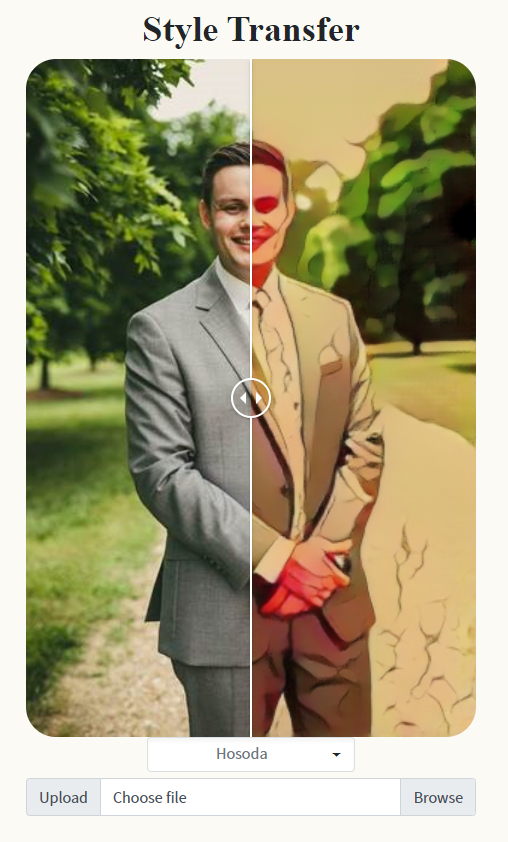

Fast Neural Style Transfer

This project is a fun web app that I built to practice serverless, web-based ML. The site is a static page written with Blazor/C#. When it receives an image, it fires off a request to an Azure Function written in Python that takes the input image and transforms it. Thus, it is a completely serverless approach. It is heavily inspired by Cartoonify (link in Read more).